In the rapidly evolving field of machine learning, keeping track of experiments, model versions, and their performance can be a challenging task. This is where Neptune steps in to change the way machine learning models are managed.

In this article, we will explore the features, benefits, and impact Neptune brings to scaling ML teams. By improving experiment tracking, comparison capabilities, and facilitating seamless model sharing, Neptune aims to streamline the path to production readiness and enable efficient collaboration among ML teams.

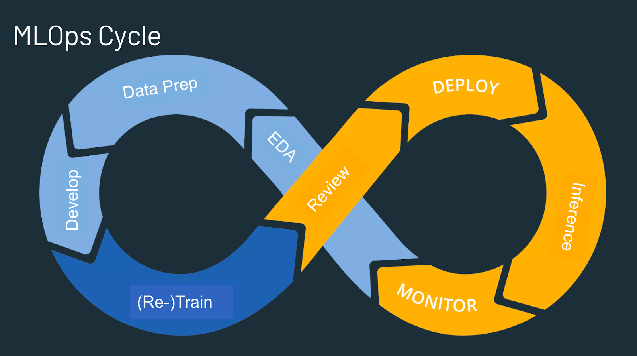

But first, what is MLOps?

MLOps stands for Machine Learning Operations. MLOps is a core function of Machine Learning engineering, focused on streamlining the process of taking machine learning models to production and then maintaining and monitoring them. MLOps is a collaborative function, often comprising data scientists, DevOps engineers, and IT.

Now that we have a glimpse of what MLOps stands for, the next question is:

Why do we need MLOps?

Productionizing machine learning is difficult. The machine learning lifecycle consists of many complex components, such as data ingestion, data preparation, model training, model tuning, model deployment, model monitoring, and explainability.

It also requires collaboration and hand-offs across teams, from Data Engineering to Data Science to ML Engineering.

Naturally, it requires stringent operational rigor to keep all these processes synchronized and working in tandem. MLOps encompasses the experimentation, iteration, and continuous improvement of the machine learning lifecycle. And that is where Neptune AI comes into play.

Now, let’s take a look at how Neptune helps ML teams track, compare, and share models in one place.

1. Efficient Experiment Tracking

First of all, Neptune is the MLOps stack component for experiment tracking. Neptune is designed to be the go-to platform for tracking and managing machine learning experiments. With Neptune, you no longer need to spend days debugging disorganized models and cluttered experimentation workflows.

With Neptune, you can log and store valuable metadata like hyperparameters, code versions, dataset versions, and experiment logs in a centralized and easily accessible location.

This organized approach to experiment tracking fosters reproducibility, enables efficient collaboration, and ultimately saves valuable time for ML practitioners.
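To make the idea concrete, here is a minimal sketch of what "tracking a run" means in practice: one record that ties together hyperparameters, a code version, a dataset version, metrics, and logs. This is not Neptune's API. The `RunRecord` class, the `dataset_fingerprint` helper, and all the values are hypothetical and exist only to illustrate the kind of metadata a tracking tool stores for you.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    """Metadata for one experiment run (hypothetical structure, not Neptune's API)."""

    name: str
    hyperparameters: dict
    code_version: str      # e.g. a git commit hash
    dataset_version: str   # e.g. a hash of the training data
    metrics: dict = field(default_factory=dict)  # metric name -> list of values
    logs: list = field(default_factory=list)

    def log_metric(self, key: str, value: float) -> None:
        # Append to a series so the full training history is kept.
        self.metrics.setdefault(key, []).append(value)

    def log_message(self, message: str) -> None:
        self.logs.append(message)


def dataset_fingerprint(rows: list) -> str:
    """Short hash of the data, so a run can be tied to an exact dataset version."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


run = RunRecord(
    name="baseline",
    hyperparameters={"learning_rate": 0.01, "epochs": 3},
    code_version="abc1234",  # placeholder commit id, made up for this example
    dataset_version=dataset_fingerprint([[1, 2], [3, 4]]),
)

# Illustrative loss values, not real results.
for epoch, loss in enumerate([0.9, 0.6, 0.4]):
    run.log_metric("train/loss", loss)
    run.log_message(f"epoch {epoch} done")

# One serializable record per run is what makes runs reproducible and shareable.
record_json = json.dumps(asdict(run), indent=2)
```

A hosted tracker does the same thing at scale: every run becomes a structured, queryable record instead of a scattered set of notebooks and log files.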

2. Streamlined Model Comparison

One of Neptune’s key features is its ability to help you compare and evaluate different models seamlessly. With Neptune, you can log and store multiple experiments, track their performance metrics, and explore the results in interactive visualizations.

This empowers ML teams to identify the best-performing models, uncover insights, and make data-driven decisions based on valuable experiment comparisons. By leveraging Neptune’s powerful model comparison capabilities, you can swiftly iterate through different model architectures and hyperparameter configurations, increasing the chances of finding the optimal solution.
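As a rough illustration of what comparison boils down to, the sketch below ranks a handful of logged runs by a chosen metric and picks the best one. It is plain Python rather than Neptune's own interface, and the run names, hyperparameters, and accuracy values are invented for the example.

```python
# Each dict stands in for one logged run (illustrative values, not real results).
runs = [
    {"name": "small-cnn", "hyperparameters": {"lr": 0.01}, "val_accuracy": 0.87},
    {"name": "large-cnn", "hyperparameters": {"lr": 0.001}, "val_accuracy": 0.91},
    {"name": "mlp", "hyperparameters": {"lr": 0.01}, "val_accuracy": 0.82},
]


def leaderboard(runs: list, metric: str, higher_is_better: bool = True) -> list:
    """Return runs sorted from best to worst on the given metric."""
    return sorted(runs, key=lambda r: r[metric], reverse=higher_is_better)


def best_run(runs: list, metric: str, higher_is_better: bool = True) -> dict:
    """Return the single best run on the given metric."""
    return leaderboard(runs, metric, higher_is_better)[0]


ranked_names = [r["name"] for r in leaderboard(runs, "val_accuracy")]
winner = best_run(runs, "val_accuracy")
```

Because every run carries its hyperparameters alongside its metrics, the winning configuration can be read straight off the record (`winner["hyperparameters"]`) rather than reconstructed from memory.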

3. Seamless Model Sharing

Neptune enables ML teams to share models, their artifacts, and insights in one central place, eliminating the need for long and messy model handovers. With Neptune, team members can access model artifacts and experiment data and perform detailed analysis without delays.

This seamless model-sharing functionality breaks down barriers within ML teams, allowing for improved collaboration and knowledge sharing. By providing instant access to model artifacts, Neptune accelerates the path to production readiness and enhances the opportunities for cross-team learning and innovation.

4. Accelerating Production-Readiness

Neptune plays a pivotal role in helping ML teams reach production readiness faster. With its comprehensive experiment tracking, streamlined model comparison, and seamless model sharing capabilities, Neptune eliminates the guesswork and minimizes the debugging time required to fine-tune models.

By optimizing the experimentation process, Neptune shortens the time it takes to iterate on, validate, and deploy models in production. This enables ML teams to achieve robust and performant models efficiently, leading to faster time-to-market and better ROI for machine learning initiatives.

5. Empowering Scaling ML Teams

Scaling ML teams face unique challenges. Neptune addresses these challenges by providing a platform that allows multiple team members to collaborate effectively while maintaining productivity and accountability.

By centralizing experiment tracking and facilitating smooth model sharing, Neptune ensures that ML teams have instant access to relevant information, reducing bottlenecks and enabling efficient decision-making.

The ability to compare experiments and models effortlessly encourages knowledge sharing and cultivates a culture of continuous improvement within the team.

Conclusion

Neptune is the essential MLOps tool that empowers ML teams to track, compare, and share their models efficiently. By simplifying experiment tracking, enabling seamless model comparison, and facilitating smooth model sharing, Neptune addresses the challenges faced by scaling ML teams.

With Neptune, teams can minimize debugging time, accelerate project lifecycles, and enhance collaboration, ultimately leading to faster production readiness. Whether you are an individual practitioner or part of a large ML team, Neptune brings organization, efficiency, and productivity to your machine learning workflow.

Get on board with Neptune and experience the power of streamlined experiment tracking and collaboration in the world of MLOps!

To learn more about Neptune AI, check out their website or reach out to Piotr Niedźwiedź, the CEO and founder.

Let’s share more ideas on AI and MLOps. Connect with me on LinkedIn.